I have been thinking about the recent release of iPhone OS 3.1 Beta 3 to the iPhone development community, and about the fact that programmers now have the frameworks and APIs they need to build “augmented reality” applications. What are “augmented reality” apps? Basically, they are applications that combine the iPhone’s camera with its GPS, compass and accelerometer to produce visual interactivity based on what the camera “sees.” For example, you could point your iPhone at a city street and see, on the iPhone’s display, information about the stores or businesses in the building that the phone is “looking at.”
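To make the idea a little more concrete, here is a rough sketch of the core calculation such an app might perform: given your GPS position, the compass heading the camera is pointing at, and the location of a point of interest, work out where (if anywhere) a label belongs on the screen. This is my own illustration in Python, not Apple’s API or any particular app’s method. The function names are hypothetical, the 50° horizontal field of view is an assumed value, and the 320-pixel width is the iPhone’s portrait screen width. It also ignores vertical placement and device tilt, which a real app would take from the accelerometer.

```python
import math

def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, in degrees clockwise from true north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0

def screen_x(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
             fov_deg=50.0, screen_width=320):
    """Horizontal pixel position for a point of interest, or None if it is outside the view.

    fov_deg is an ASSUMED horizontal camera field of view; heading_deg would come
    from the compass (degrees clockwise from north).
    """
    # Angle between where the camera points and where the POI lies, normalized to [-180, 180).
    offset = (bearing_deg(user_lat, user_lon, poi_lat, poi_lon) - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None  # POI is not in front of the camera
    # Linear mapping: -fov/2 -> left edge (0), +fov/2 -> right edge (screen_width).
    return (offset / fov_deg + 0.5) * screen_width
```

For example, facing due north with a point of interest due north of you, the label lands in the middle of the screen (x = 160); turn 20° to the right and the same label slides toward the left edge, and once it falls outside the field of view the function returns `None` so nothing is drawn.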

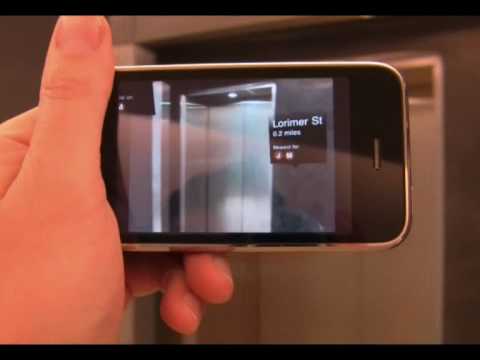

Recently, there has been a lot of hype around a London Underground app called “Nearest Tube” by developer acrossair. The application lets you “look” around the Underground, and as you do so, it gives you visual indicators showing where certain street exits or Tube station entrances are.

Opening these APIs (Application Programming Interfaces) means that developers can now look beyond what is contained within the device itself. The camera becomes an eye to the outside world.

So what will become of this? That depends on how open Apple is willing to be and how much of the underlying infrastructure it makes available to developers, but there are some fantastic possibilities. Let’s work through the logic here:

- You can already display live video of the outside world on the iPhone screen.

- You can also buy video glasses that display the movies and videos from your iPod.

- Why not take the next step: send the camera video from the iPhone up to the glasses, but run it through augmented reality apps on the iPhone before it reaches them?

For example, a company like Vuzix could produce eyewear that displays pass-through video from the iPhone’s camera (rather than simply playing video from the iPod application). Vuzix has already announced a product, the Wrap 920AV, which is supposed to be available in Fall 2009 and has a “see-thru” display that lets you view the outside environment. The company does seem to have thought through the full-immersion, interactive “perspective.” It will offer two optional accessories: a tracking system called 6DoF (Six Degrees of Freedom), which lets you move your viewing perspective up/down, forward/back and left/right and rotate it, and a “Stereo Camera Pair,” a USB-connected accessory that allows real 3D items to be overlaid with generated 3D.

I started brainstorming some possible application ideas. Some might be feasible; others might simply not be possible:

- GPS Navigation – Imagine if a company like TomTom could put GPS directions directly in your line of sight, so you would not have to keep glancing down at a GPS unit or your iPhone, which is distracting. It could simply be an enhancement to a regular GPS program, with arrows overlaid in the glasses to show simple turn directions as you look down the road.

- Enhanced Perspective – A walking tour application could combine GPS and the camera with Wikipedia to provide geographic and historical information. As you walk through a city or historical area, it could show information about buildings, structures, artwork, etc., giving you more of a docent-style tour. This type of application could also be useful in a museum for enhanced tours.

- Social Networking App – Imagine a “Google Latitude Streetview” type of implementation that shows where people are in a city by displaying pushpins for your friends or connections relative to the direction you are looking. As you walk around, the pushpins (or faces or avatars) could grow or shrink based on how close you are to those people.

- Gaming – How about a battlefield game in which your surroundings become the battlefield, with animation overlaid on what you are seeing? Somehow you would have to use the iPhone as a controller while using the glasses for the visual output.

- Virtual Teleprompter – People who deliver speeches or do live reporting could have real-time information sent to their glasses. This would eliminate the need for handwritten speech cards, or for a constant stream of voices in a reporter’s earpiece relaying information.
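As a rough illustration of the social networking idea, the “grow or shrink based on proximity” behavior could be as simple as computing the distance to each friend and mapping it to a pushpin size. This Python sketch is purely hypothetical: the function names, the 50 m and 2 km thresholds and the pixel sizes are all my own assumptions, and it uses the standard haversine formula with a mean Earth radius for the distance.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def pin_size_px(distance_m, near_m=50.0, far_m=2000.0, max_px=64.0, min_px=12.0):
    """Pushpin size (hypothetical values) that shrinks linearly from max_px at near_m
    or closer down to min_px at far_m or beyond."""
    if distance_m <= near_m:
        return max_px
    if distance_m >= far_m:
        return min_px
    t = (distance_m - near_m) / (far_m - near_m)  # 0.0 at near_m, 1.0 at far_m
    return max_px - t * (max_px - min_px)
```

A friend standing next to you would get the full 64-pixel pin, while someone across town would shrink to the 12-pixel minimum. Clamping at both ends keeps nearby pins from covering the whole view and keeps distant ones from disappearing entirely.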

However, there are many dependencies and unknowns that would have to be worked out, such as how to capture video of what you are looking at without physically taping your iPhone to your head. The ideas above may simply not be possible with the processors and APIs available in existing devices. There is also, of course, the liability of these interactive applications distracting people’s attention and obstructing their view. Some other unknowns:

- The glasses would have to be able to send and receive video and other data (e.g., head movement and readings from an embedded compass).

- Could this be accomplished via Bluetooth, or would the glasses have to be tethered?

- Would the transmit/receive (TX/RX) speeds be fast enough for a good experience?

Perhaps in the end we will start calling it an “eyePhone” instead of an iPhone. Regardless, I’m encouraged that Apple is now allowing applications like these to happen with the availability of “augmented reality” APIs. Assuming the devices can handle the data processing required AND the network can handle the potentially vast amounts of data being received and transmitted, I believe there are some really interesting possibilities in the short term.

The really robust applications are probably a few years out, but I think we will start seeing entry-level apps using HUDs (Heads Up Displays) in the next few months. These displays are already used by the military and even in some higher-end cars; the technology now just has to make it to mobile consumers.

HTD says: I think HUDs will be extremely useful in the future, and at the consumer level, that future is really upon us now.